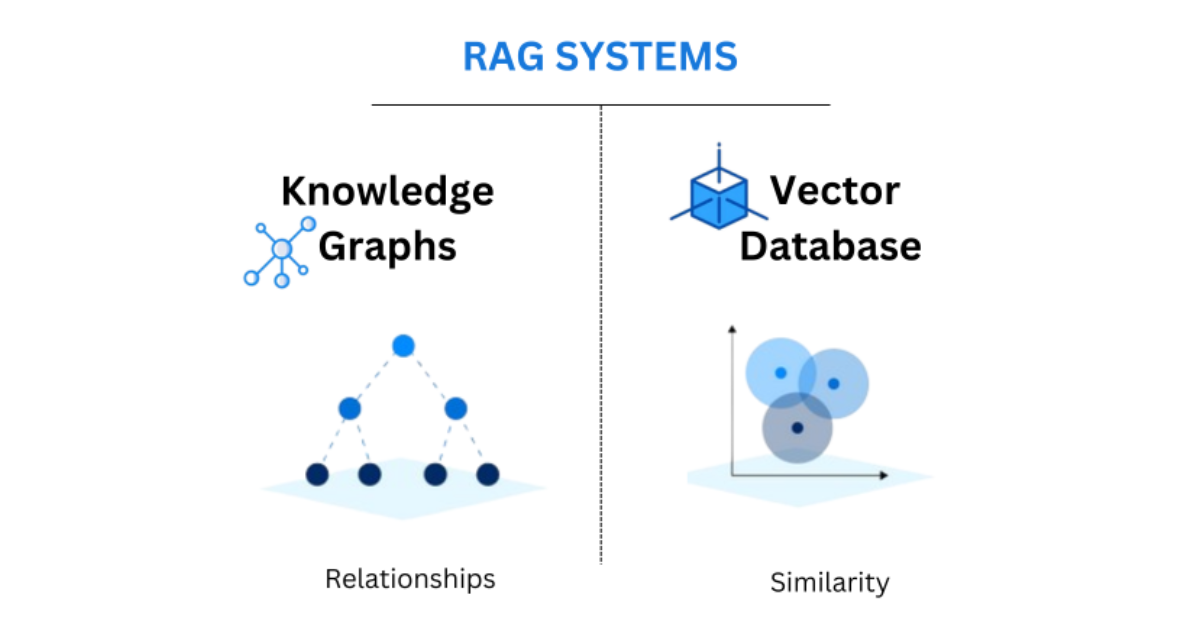

Think of RAG as searching a library by keyword: the hits are handed to a language model, which connects the dots. That works well for simple questions, but what happens when the answer depends on linked facts spread across different sources?

GraphRAG structures knowledge into entities and the relations between them, so answers can be assembled in a more considered, connected way. It is like trading a box of disorganized index cards for a smart map of relationships. That makes it an excellent fit for media search, where “who said what, when, and in what context” matters enormously.

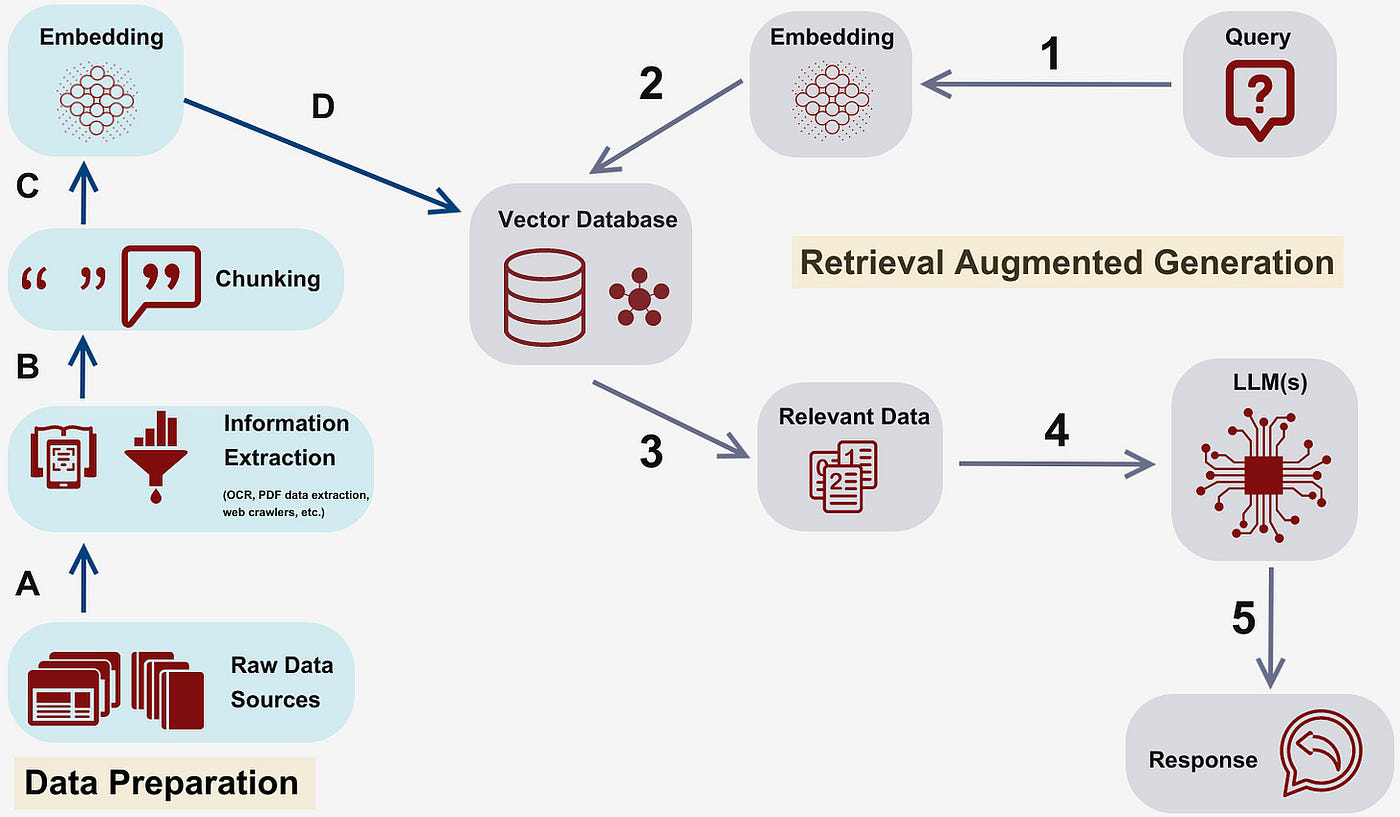

How RAG Works (Briefly):

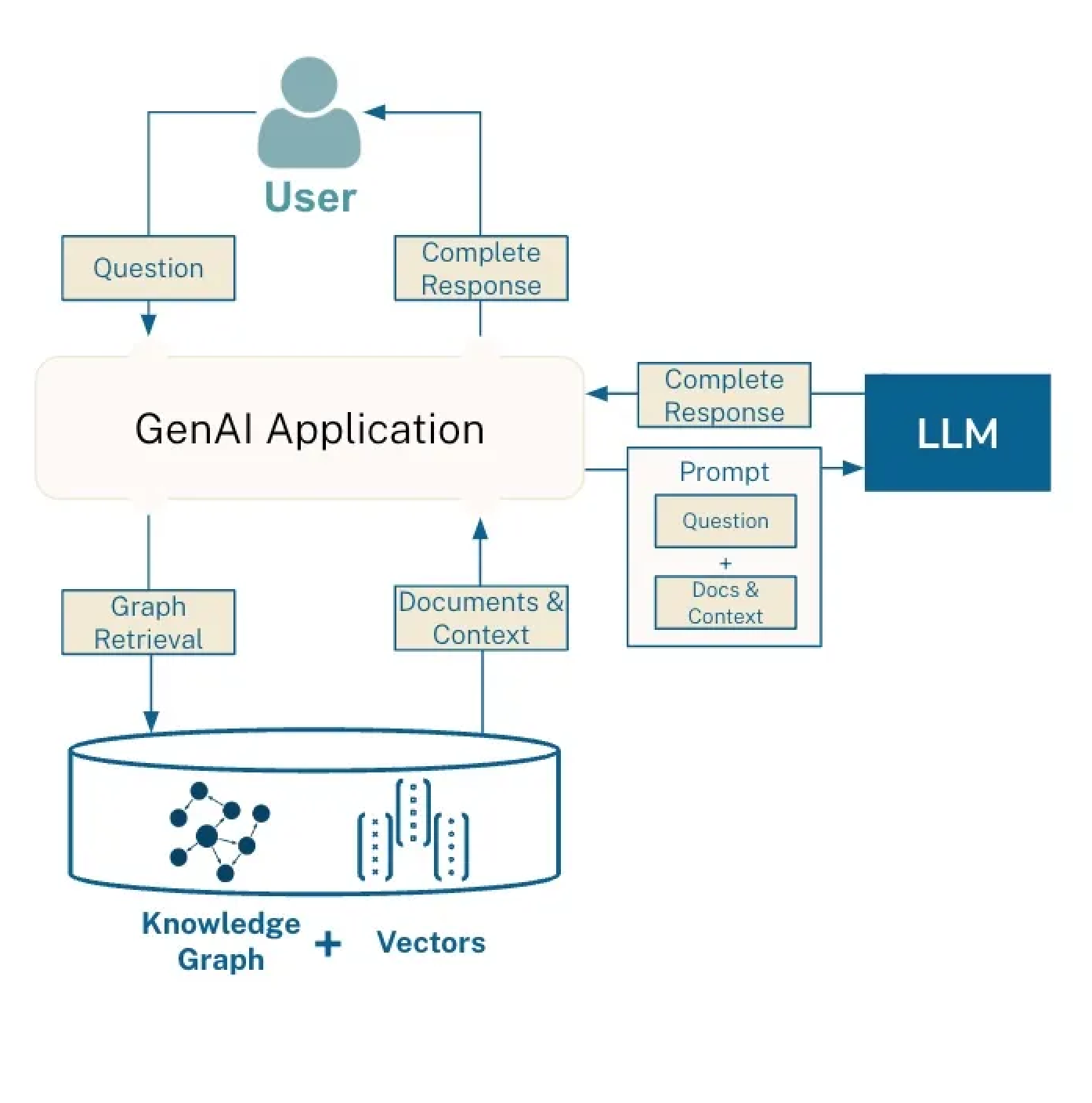

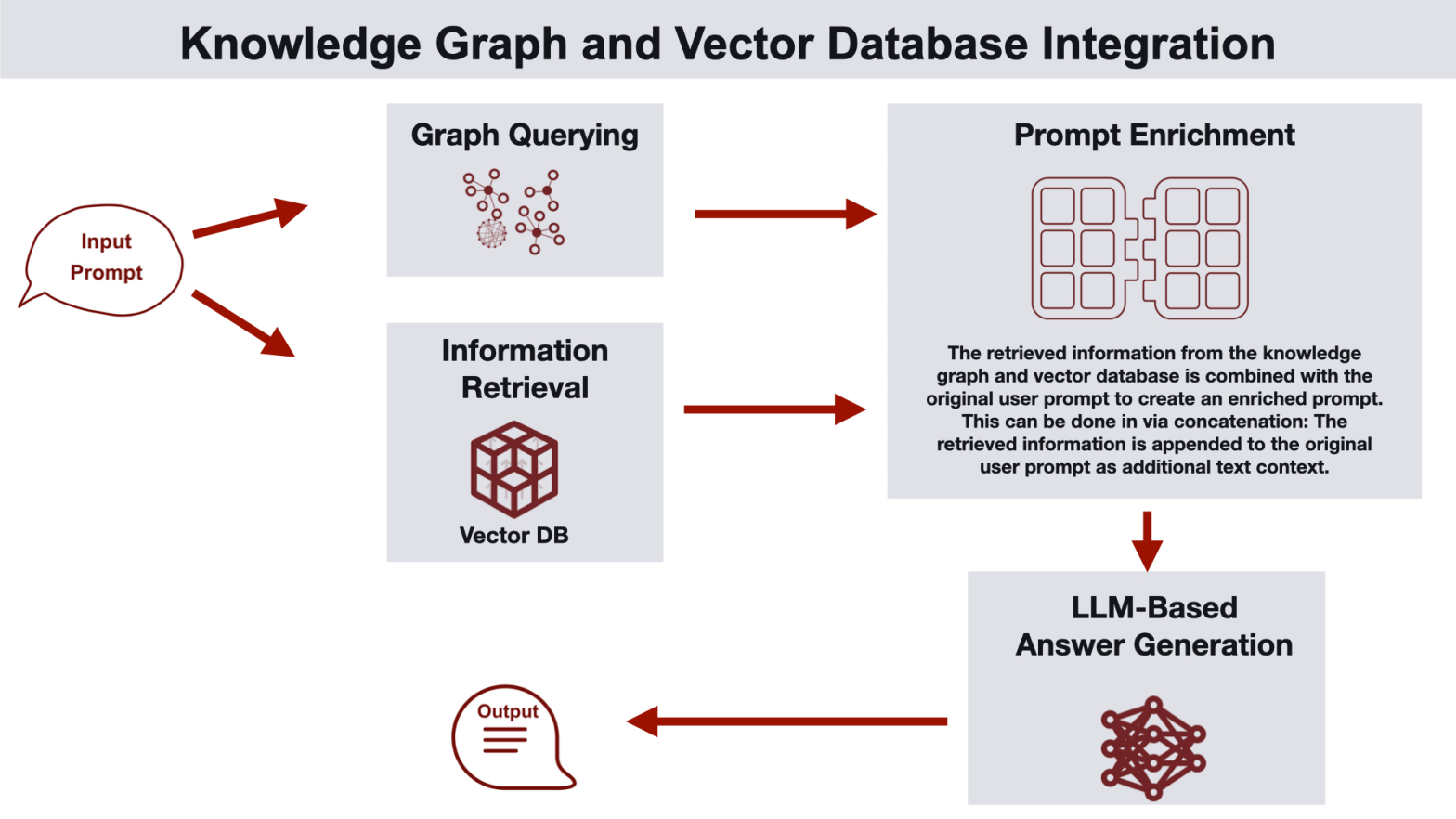

In RAG, documents are split into chunks, and each chunk is converted into a vector (a numerical representation). At query time, your question is embedded the same way, and the chunks whose vectors are most similar to it are retrieved. Those chunks are passed to the LLM together with the question, so the answer is grounded in the retrieved content.
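The retrieve-then-generate loop described above can be sketched in a few lines. This is a minimal illustration, assuming a toy bag-of-words `embed` function as a stand-in for a real embedding model; the names `embed`, `cosine`, and `retrieve` and the sample chunks are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    # Hypothetical stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a, b):
    # Cosine similarity between two sparse count vectors (missing keys count as 0).
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    # Embed the query, score every chunk against it, and keep the k most similar.
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


chunks = [
    "Messi scored the opening goal in the first half",
    "The coach praised the defence after the match",
    "Fans celebrated the goal outside the stadium",
]
question = "who scored the goal"
context = retrieve(question, chunks, k=2)
# The retrieved chunks and the question are what the LLM would receive.
prompt = "Answer using only this context:\n" + "\n".join(context) + "\n\nQuestion: " + question
print(prompt)
```

Note that each chunk is scored on its own: nothing in this loop knows that two chunks might describe the same match or the same speaker.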

It works well for direct Q&A, but it loses context when a relationship spans several chunks or when reasoning must follow a chain of facts.

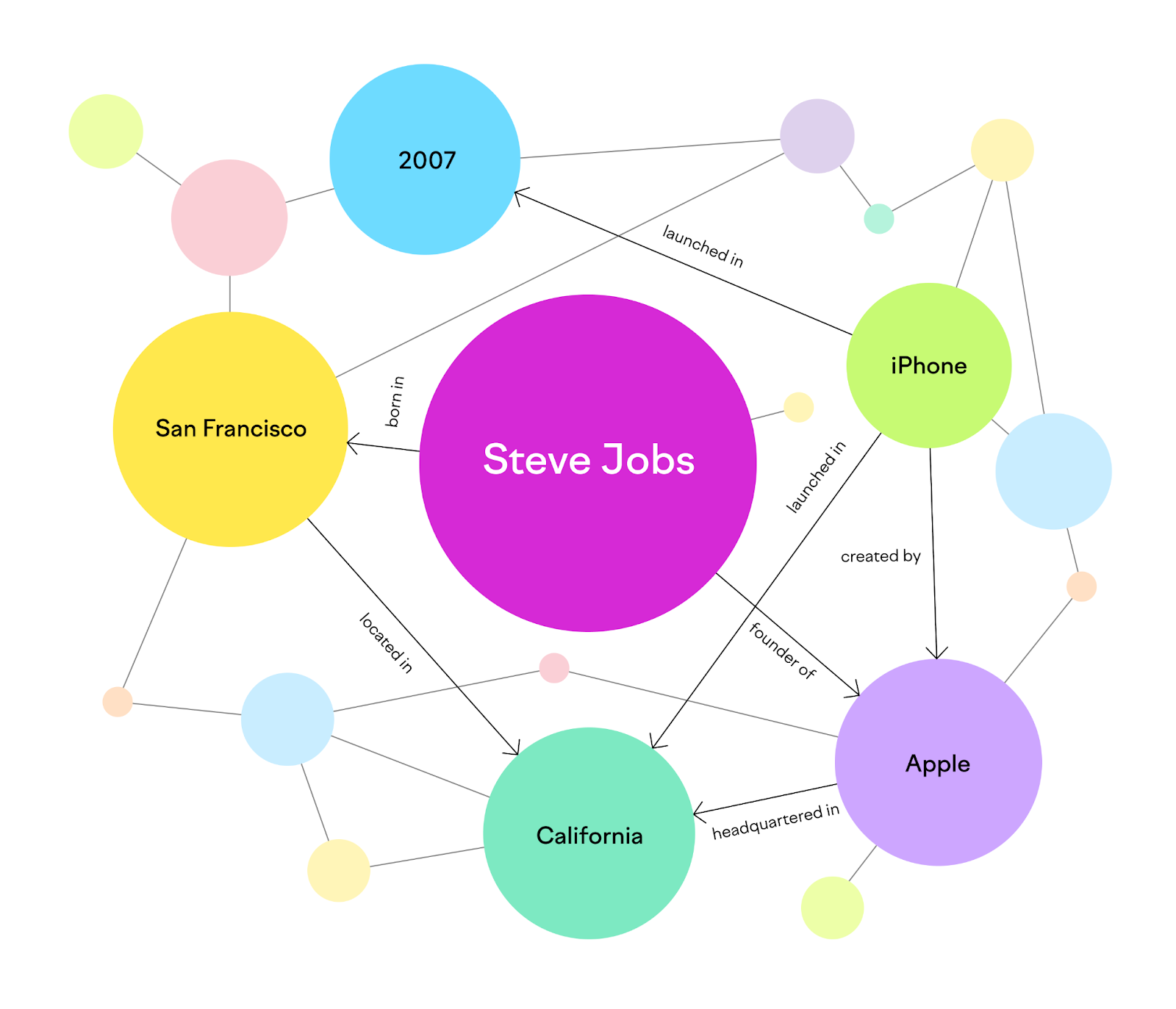

What is GraphRAG – Simply Explained

At a high level, GraphRAG builds a knowledge graph from your data sources. Each real-world entity (a person, item, event, or scene) becomes a node.

Relationships such as “spoke about”, “appeared with”, and “follows” then become edges connecting those nodes.
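To make the node/edge idea concrete, here is a minimal in-memory sketch. The `KnowledgeGraph` class, its methods, and the sample entities are hypothetical illustrations, not the data model of any particular graph database.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class KnowledgeGraph:
    # node id -> node type, e.g. "person", "topic", "clip"
    nodes: dict = field(default_factory=dict)
    # source node id -> list of (relation, target node id) edges
    edges: dict = field(default_factory=lambda: defaultdict(list))

    def add_node(self, node_id: str, node_type: str) -> None:
        self.nodes[node_id] = node_type

    def add_edge(self, source: str, relation: str, target: str) -> None:
        # Refuse edges whose endpoints have not been registered as nodes.
        if source not in self.nodes or target not in self.nodes:
            raise KeyError(f"unknown node in edge {source!r} -> {target!r}")
        self.edges[source].append((relation, target))

    def neighbors(self, node_id: str, relation: Optional[str] = None) -> list:
        # Follow outgoing edges, optionally filtered by relation type.
        return [t for r, t in self.edges.get(node_id, []) if relation is None or r == relation]


kg = KnowledgeGraph()
for node_id, node_type in [("Alice", "person"), ("Bob", "person"), ("clip_17", "clip"),
                           ("clip_18", "clip"), ("transfers", "topic")]:
    kg.add_node(node_id, node_type)
kg.add_edge("Alice", "spoke_in", "clip_17")
kg.add_edge("Alice", "appeared_with", "Bob")
kg.add_edge("Alice", "spoke_about", "transfers")
kg.add_edge("clip_18", "follows", "clip_17")

print(kg.neighbors("Alice"))
```

Storing edges as typed `(relation, target)` pairs is what later lets a query say "only follow `spoke_in` edges" instead of matching on text.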

Why GraphRAG Outperforms RAG:

Paired with a custom embedding framework, this approach defines the semantic search workflow in Gyrus’ solution:

1. Clearer Reasoning (Multi-Hop):

GraphRAG supports multi-step (multi-hop) reasoning:

For instance, consider “Find all scenes where Alice mentions topic A after event B.” Plain RAG struggles to follow that path because it treats each retrieved chunk in isolation.
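The Alice query above breaks into three hops: Alice's mentions, then the ones about topic A, then the scenes that come after event B. The sketch below assumes each mention is stored as its own node (a common way to model a "who said what, where" fact) and that every scene has a start time in seconds. All identifiers and times are hypothetical.

```python
# Hypothetical toy graph as (subject, relation, object) triples.
triples = [
    ("m1", "spoken_by", "Alice"), ("m1", "about", "topic_A"), ("m1", "in_scene", "scene_1"),
    ("m2", "spoken_by", "Alice"), ("m2", "about", "topic_A"), ("m2", "in_scene", "scene_3"),
    ("m3", "spoken_by", "Bob"), ("m3", "about", "topic_A"), ("m3", "in_scene", "scene_4"),
    ("m4", "spoken_by", "Alice"), ("m4", "about", "topic_C"), ("m4", "in_scene", "scene_4"),
    ("event_B", "occurs_in", "scene_2"),
]
# Hypothetical scene start times in seconds.
scene_time = {"scene_1": 10, "scene_2": 40, "scene_3": 95, "scene_4": 120}


def objects(subject, relation):
    return {o for s, r, o in triples if s == subject and r == relation}


def subjects(relation, obj):
    return {s for s, r, o in triples if r == relation and o == obj}


def scenes_where_mentions_after(person, topic, event):
    # Hop 1: mention nodes spoken by this person AND about this topic.
    mentions = subjects("spoken_by", person) & subjects("about", topic)
    # Hop 2: the scenes those mentions occur in.
    scenes = {sc for m in mentions for sc in objects(m, "in_scene")}
    # Hop 3: locate the event in time and keep only scenes that start later.
    event_time = min(scene_time[s] for s in objects(event, "occurs_in"))
    return sorted((s for s in scenes if scene_time[s] > event_time), key=scene_time.get)


print(scenes_where_mentions_after("Alice", "topic_A", "event_B"))
```

A chunk-only retriever would need all three facts (speaker, topic, and event timing) to land in the same chunk to answer this; the graph joins them explicitly.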

2. More Accurate & Trustworthy:

GraphRAG makes the reasoning traceable: Alice (node) → mentions (edge) → topic (node) → clip (node). You can show exactly how an answer was constructed, which makes the process far more transparent and easier to trust.
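One simple way to produce such a trace is a breadth-first search that records every edge it follows. This is a minimal sketch over a hypothetical adjacency map; `explain_path` and `render` are illustrative names, not part of any GraphRAG library.

```python
from collections import deque

# Hypothetical graph: node -> list of (relation, target) edges.
graph = {
    "Alice": [("mentions", "topic_A"), ("appeared_with", "Bob")],
    "topic_A": [("discussed_in", "clip_42")],
    "Bob": [("spoke_in", "clip_7")],
}


def explain_path(start, goal):
    """Breadth-first search returning the (source, relation, target) edges from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, relation, nxt)]))
    return None  # no connection, so there is nothing to explain


def render(start, path):
    # Turn the edge list into a human-readable trail.
    text = start
    for _, relation, target in path:
        text += f" -[{relation}]-> {target}"
    return text


path = explain_path("Alice", "clip_42")
print(render("Alice", path))  # Alice -[mentions]-> topic_A -[discussed_in]-> clip_42
```

Because BFS explores level by level, the returned trail is a shortest path in hops, which tends to be the easiest explanation to read.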

3. Efficient Retrieval:

Instead of retrieving many loosely relevant chunks, GraphRAG can fetch just the relevant subgraph, which means shorter, faster, and more focused prompts.
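A focused subgraph can be as simple as "everything within k hops of the entity the user asked about", serialized as one short fact per line. This sketch assumes an in-memory list of hypothetical triples; a real system would run the equivalent traversal inside its graph store.

```python
# Hypothetical triples; in production these would come from the graph store.
triples = [
    ("Messi", "scored_in", "clip_12"),
    ("clip_12", "followed_by", "clip_13"),
    ("clip_13", "features", "Coach"),
    ("Coach", "spoke_about", "tactics"),
    ("Ronaldo", "scored_in", "clip_90"),
]


def k_hop_subgraph(seed, k):
    """Return the triples within k hops of seed, following edges in either direction."""
    frontier, visited, selected = {seed}, {seed}, set()
    for _ in range(k):
        next_frontier = set()
        for s, r, o in triples:
            if s in frontier or o in frontier:
                selected.add((s, r, o))
                for node in (s, o):
                    if node not in visited:
                        visited.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return sorted(selected)


subgraph = k_hop_subgraph("Messi", 2)
# Serialize as compact one-line facts for the prompt.
context = "\n".join(f"{s} {r} {o}" for s, r, o in subgraph)
print(context)
```

Unrelated facts (here, the Ronaldo clip) never reach the prompt, and `k` gives a direct knob for trading context breadth against prompt length.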

4. Handles Structured Knowledge Naturally:

Graphs are especially useful when knowledge is relational, such as timelines, speaker-to-scene associations, or event sequences. Plain RAG has no explicit way to represent this kind of structure; GraphRAG does.
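Event sequencing is a good example: once clips are linked by "followed by" edges, a timeline is just a topological order of the graph. This sketch uses Python's standard `graphlib` module (3.9+); the clip names and edges are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical "followed_by" edges between clips, deliberately listed out of order.
followed_by = [
    ("clip_goal", "clip_celebration"),
    ("clip_kickoff", "clip_goal"),
    ("clip_celebration", "clip_interview"),
]


def timeline(edges):
    ts = TopologicalSorter()
    for earlier, later in edges:
        ts.add(later, earlier)  # "later" can only be placed after "earlier"
    # Raises graphlib.CycleError if the edges contain a cycle.
    return list(ts.static_order())


print(timeline(followed_by))
```

When the edges form a single chain the order is unique; if some clips are unordered relative to each other, any valid topological order may be returned.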

GraphRAG in Intelligent Media Search:

Here’s how our system brings it all together:

- Entity Extraction: Determine who is speaking, what they talk about, which clips contain those utterances, and when.

- Graph Building: Clips, speakers, and topics become nodes; relations such as “spoke in,” “mentioned,” and “followed by” become edges.

- Embedding Graph Parts: Generate vectors for nodes or small subgraphs.

- Query Handling: Extract keywords from the query (e.g., “Messi,” “goal,” “scored”) and traverse the graph from the matching nodes to gather relevant context.

- Hybrid Retrieval: Combine graph context with vector similarity to retrieve the best nodes or subgraphs.
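The query-handling step can be sketched as keyword extraction, then entity linking, then a one-hop traversal. This is a minimal sketch assuming a hypothetical alias table that maps surface words to graph node ids. Real systems would typically use NER or an LLM for extraction. The names `aliases`, `edges`, `extract_entities`, and `clips_for` are illustrative.

```python
import re

# Hypothetical alias index mapping query words to graph node ids.
aliases = {"messi": "player:Messi", "goal": "event:goal", "goals": "event:goal", "scored": "event:goal"}
# Hypothetical graph: entity node -> list of (relation, clip) edges.
edges = {
    "player:Messi": [("appears_in", "clip_12"), ("appears_in", "clip_30")],
    "event:goal": [("occurs_in", "clip_12"), ("occurs_in", "clip_55")],
}


def extract_entities(query):
    # Naive keyword extraction: lowercase word tokens looked up in the alias index.
    tokens = re.findall(r"[a-z]+", query.lower())
    return sorted({aliases[t] for t in tokens if t in aliases})


def clips_for(query):
    entities = extract_entities(query)
    # Traverse one hop from each matched entity, then keep clips linked to all of them.
    clip_sets = [{clip for _, clip in edges.get(e, [])} for e in entities]
    return sorted(set.intersection(*clip_sets)) if clip_sets else []


print(clips_for("When has Messi scored a goal?"))
```

Intersecting the per-entity clip sets is one possible policy ("clips involving every entity"). A union followed by ranking is the looser alternative.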

End users get precise clip references, with the surrounding context and an explanation, instead of off-topic or fragmentary results.

Clear Comparison: RAG vs GraphRAG:

| Feature | Traditional RAG | GraphRAG |
|---|---|---|
| Data structure | Flat text chunks | Knowledge graph: nodes + relationships |
| Retrieval method | Vector similarity | Graph traversal + vector ranking |
| Reasoning | Single-chunk answers | Multi-hop, relational reasoning |
| Explainability | Opaque | Transparent via graph paths |
| Precision | Moderate relevance | Higher; 35%+ improvement reported in some scenarios |
| Efficiency | Large chunk retrieval, longer prompts | Focused subgraph retrieval, fewer tokens |
| Best for queries like… | “What is X?” | “Who mentioned X after Y, and in which clip?” |

Steps to Implement GraphRAG (Tech View):

1. Building Entity Relationship (ER) Graphs:

- Use NLP- or LLM-based relation extraction from media: who, what, when.

- Store the graph in a database such as Neo4j, Amazon Neptune, or MongoDB Atlas.
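Step 1 can be sketched end to end as transcript segments becoming triples, and triples becoming Cypher statements for a store such as Neo4j. This assumes a hypothetical segment schema (`clip`, `speaker`, `text`) and uses naive keyword matching in place of the NLP/LLM relation extraction mentioned above. Timestamps are left out for brevity. `extract_triples`, `to_cypher`, and `TOPICS` are illustrative names.

```python
import re

# Hypothetical transcript segments; a real pipeline would produce these from speech-to-text plus diarization.
segments = [
    {"clip": "clip_12", "speaker": "Alice", "text": "That transfer will change the league"},
    {"clip": "clip_13", "speaker": "Bob", "text": "The derby was decided by one goal"},
]
# Hypothetical topic vocabulary; keyword matching stands in for NLP/LLM relation extraction.
TOPICS = {"transfer": "topic:transfers", "goal": "topic:goals"}


def extract_triples(segment):
    """Turn one transcript segment into (subject, RELATION, object) triples."""
    triples = [(segment["speaker"], "SPOKE_IN", segment["clip"])]
    for word in re.findall(r"[a-z]+", segment["text"].lower()):
        if word in TOPICS:
            triples.append((segment["speaker"], "MENTIONED", TOPICS[word]))
    return triples


def to_cypher(source, relation, target):
    """Build a Cypher MERGE statement plus its parameters for one triple."""
    # Cypher cannot take relationship types as parameters, so whitelist the characters first.
    if not re.fullmatch(r"[A-Z_]+", relation):
        raise ValueError(f"unsafe relationship type: {relation!r}")
    query = (
        "MERGE (a:Entity {id: $source}) "
        "MERGE (b:Entity {id: $target}) "
        f"MERGE (a)-[:{relation}]->(b)"
    )
    return query, {"source": source, "target": target}


statements = [to_cypher(*t) for seg in segments for t in extract_triples(seg)]
for query, params in statements:
    print(query, params)
```

`MERGE` only creates nodes and relationships that do not already exist, so re-ingesting the same clip does not duplicate the graph. With the official Neo4j Python driver, each `(query, params)` pair could be executed via `session.run(query, params)`; that call is omitted here so the sketch runs without a database.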

2. Embed Graph Components:

- Create embeddings for nodes and subgraphs to support hybrid lookup.
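One common way to embed a node "with its context" is to describe it through its incident edges (effectively its one-hop subgraph) and embed that text. The sketch below assumes a hypothetical hashing-trick `embed` function as a stand-in for a real embedding model, plus made-up triples.

```python
import hashlib
import math
import re

DIM = 64  # hypothetical embedding size


def embed(text):
    # Hashing-trick bag of words, standing in for a real embedding model.
    vec = [0.0] * DIM
    for token in re.findall(r"\w+", text.lower()):
        # md5 is used only as a stable hash (Python's built-in hash() is randomized per process).
        idx = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


triples = [
    ("Alice", "spoke_in", "clip_12"),
    ("Alice", "mentioned", "transfers"),
    ("Bob", "spoke_in", "clip_13"),
]


def node_text(node):
    # Describe a node through its incident edges so the vector carries relational context.
    facts = [f"{s} {r} {o}" for s, r, o in triples if node in (s, o)]
    return f"{node}: " + "; ".join(facts)


node_vectors = {node: embed(node_text(node)) for node in ("Alice", "Bob", "clip_12")}
print(node_text("Alice"))
```

Normalizing each vector to unit length means a plain dot product later equals cosine similarity.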

3. Retrieval Pipeline:

- Traverse graph for candidate subgraphs.

- Rank by embedding similarity.
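Step 3 can be sketched as two phases: a graph traversal that proposes candidates, then similarity ranking that orders them. This sketch assumes a hypothetical adjacency map and per-node text descriptions, and a bag-of-words cosine stands in for real embeddings.

```python
import math
import re
from collections import Counter

# Hypothetical graph: node -> neighbours, plus a short text description per clip.
adjacency = {"Messi": ["clip_12", "clip_30"], "clip_12": ["clip_13"], "clip_30": [], "clip_13": []}
descriptions = {
    "clip_12": "Messi scores a free kick goal",
    "clip_30": "Messi warms up before the match",
    "clip_13": "crowd celebrates the goal",
}


def vec(text):
    # Bag-of-words vector, a stand-in for real embeddings.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(seed, query, hops=2, k=2):
    # Phase 1: traverse up to `hops` edges from the seed to collect candidate nodes.
    candidates, frontier = set(), [seed]
    for _ in range(hops):
        frontier = [n for f in frontier for n in adjacency.get(f, []) if n not in candidates]
        candidates.update(frontier)
    # Phase 2: rank candidates by query similarity; ties broken by name for determinism.
    q = vec(query)
    ranked = sorted(candidates, key=lambda n: (-cosine(q, vec(descriptions.get(n, ""))), n))
    return ranked[:k]


print(retrieve("Messi", "free kick goal"))
```

The traversal bounds the search space (only clips connected to Messi are considered), while the similarity score decides which of them best match the wording of the query.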

4. Context Construction:

- Summarize the subgraph: entities, relationships, timestamps.

- Append the top-ranked text chunks or snippets.
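A context-construction step along these lines might turn the subgraph into a short structured summary (entities, relationships, timestamps) and append the top snippets. The sketch below is one possible layout, assuming a hypothetical `build_context` helper and made-up inputs; the exact prompt format is a design choice, not a fixed standard.

```python
def build_context(triples, timestamps, snippets, question):
    """Assemble an LLM prompt from a retrieved subgraph plus top-ranked text snippets."""
    entities = sorted({n for s, _, o in triples for n in (s, o)})
    lines = ["Entities: " + ", ".join(entities), "Relationships:"]
    for s, r, o in triples:
        # Attach a timestamp when the target (e.g. a clip) has one.
        when = f" (at {timestamps[o]})" if o in timestamps else ""
        lines.append(f"- {s} {r} {o}{when}")
    lines.append("Supporting snippets:")
    lines += [f"- {snippet}" for snippet in snippets]
    lines.append(f"Question: {question}")
    return "\n".join(lines)


prompt = build_context(
    triples=[("Alice", "mentioned", "transfers"), ("Alice", "spoke_in", "clip_12")],
    timestamps={"clip_12": "00:31"},
    snippets=["Alice: That transfer will change the league."],
    question="What did Alice say about transfers?",
)
print(prompt)
```

Keeping the structured facts and the raw snippets in clearly labeled sections lets the model cite graph relationships and quote text separately.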

5. Answer Generation:

- The LLM reasons over both structured and unstructured data.

This hybrid pipeline yields context-rich, precise answers.

Real-World Results & Evidence:

- AWS reports a 35% precision improvement using GraphRAG over vector-only RAG.

- Microsoft Research applied GraphRAG to private datasets and reported strong improvements in connecting information across sources and answering complex queries.

- Benchmark studies from 2025 report GraphRAG outperforming traditional RAG on multi-hop QA and summarization, with a systematic evaluation highlighting the clearest benefits in relationship-heavy scenarios.

Final Thoughts:

GraphRAG transforms media search. It brings structure, clarity, and logic to what used to be fragmented, giving Intelligent Media Search the ability to:

- Understand connections and timelines in media.

- Trace how answers are arrived at.

- Deliver precise, clip-based results with explanations.

GraphRAG is a game changer for anyone building intelligent media search tools. Feel free to reach us at [email protected] if you’re interested in integrating it with your existing platform or seeing a demo!