What holds media companies back today isn’t a lack of content. It’s a lack of clarity. When videos pile up across scattered folders, locating one specific clip takes time, no matter how advanced the Media Asset Management (MAM) system seems. Right where you’d expect efficiency, things slow down.

Most Media Asset Management platforms handle storage, organization, and permissions smoothly. Their video search tools, however, lag behind. Finding files usually means relying on tags, titles, and manually entered metadata. If those details are skipped or inconsistent, good luck finding the file later.

Meaningful search was rarely a design priority from the start. When metadata is thin, content effectively disappears. Some teams call it inefficient; others simply accept it. Few platforms treat discovery as core functionality.

Strong storage doesn’t guarantee smart retrieval. Without structured input, clarity fades fast. Video search stays weak because design choices have long favored structure over findability, and that gap remains wide despite advances elsewhere. Useful results demand more than filenames.

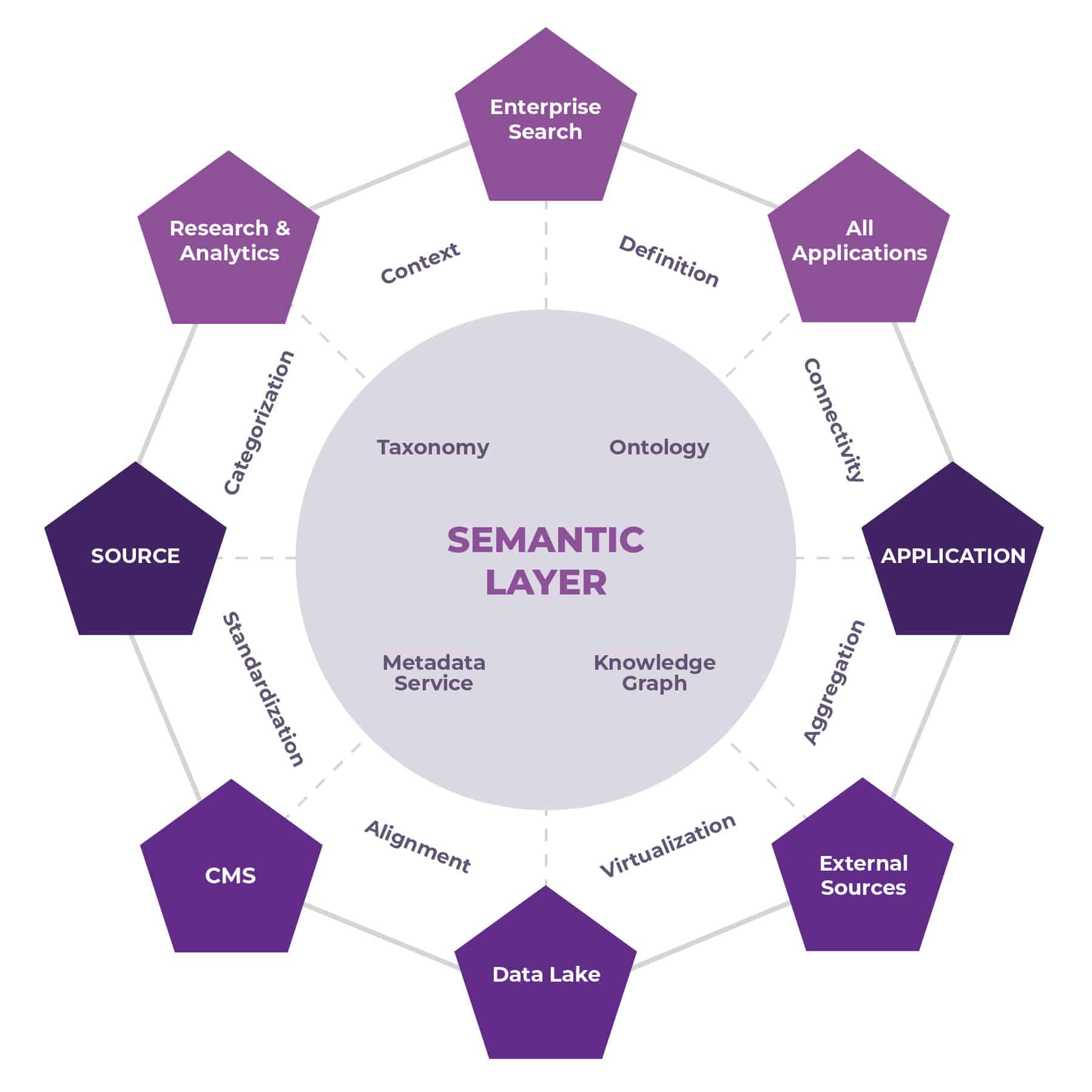

Here’s the thing about Semantic Video Search: it has to connect across every MAM rather than stay trapped inside a single system.

The Real Limitation Isn’t MAM – It’s Search Design:

Traditional systems find clips only through exact keyword matches. If the labels miss the mark, the video stays hidden. Teams that use different terms for the same thing get inconsistent results, and when metadata is missing, the material is as good as gone.

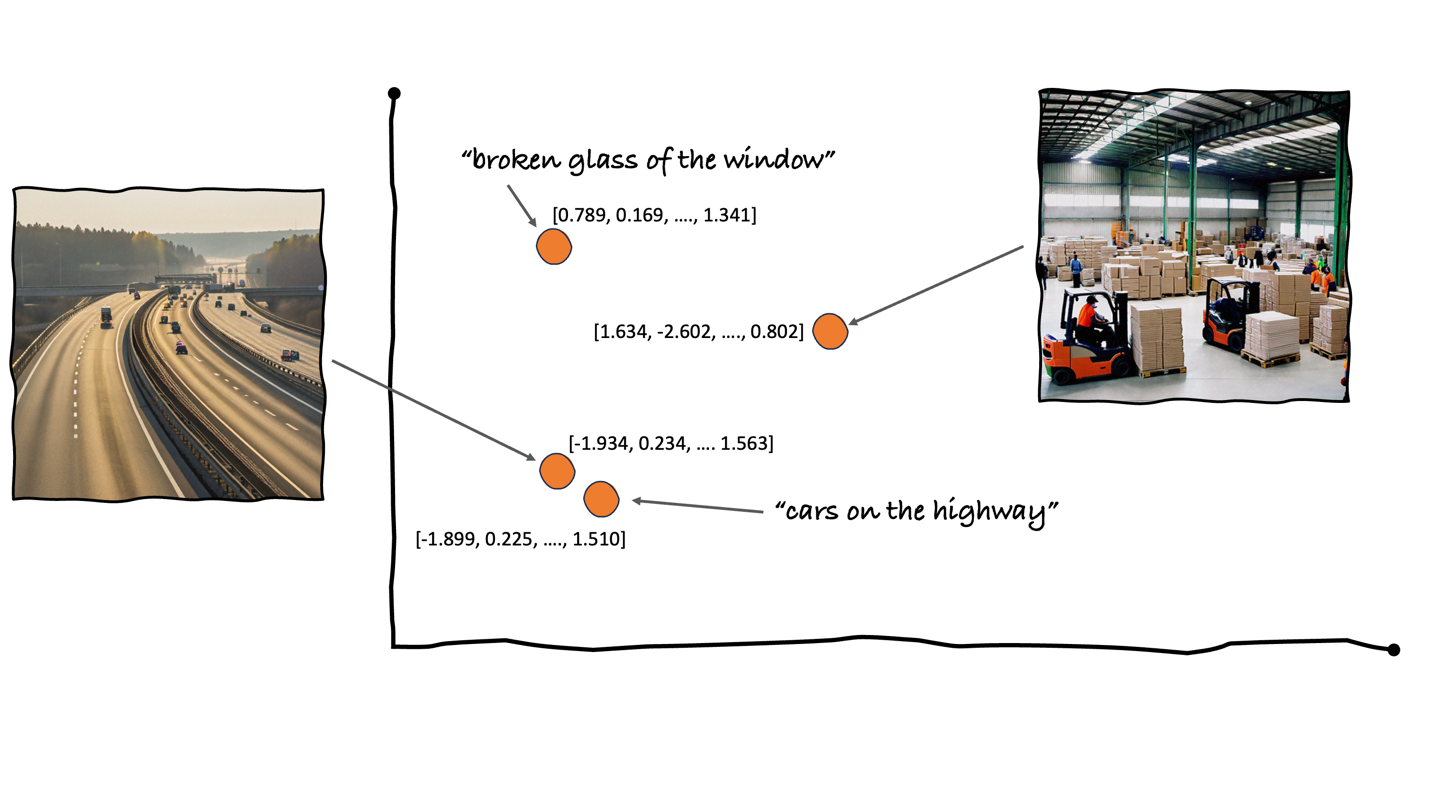

Semantic video search takes a different approach: instead of matching keywords, it interprets intent. The system understands what unfolds on screen, combining speech, actions, and visuals into one picture of the content. Searching feels more like describing a memory than recalling a file name or a tag someone entered years ago.
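The contrast can be sketched in a few lines of Python. This is a toy illustration, not how any particular product works: the clip IDs, tags, and three-dimensional "meaning" vectors are invented, and a real system would obtain its vectors from an embedding model (assumed here, not shown) before comparing them with a measure such as cosine similarity.

```python
import math

# Toy catalogue. Tags stand in for manually entered metadata; the vectors are
# HAND-MADE stand-ins for embeddings. Illustrative dimensions:
# [sport, celebration, weather]
CLIPS = {
    "clip_001": {"tags": {"football", "goal"}, "vector": [0.9, 0.8, 0.0]},
    "clip_002": {"tags": {"storm", "rain"}, "vector": [0.0, 0.1, 0.95]},
    "clip_003": {"tags": {"match", "fans"}, "vector": [0.8, 0.9, 0.1]},
}


def keyword_search(query):
    """Return clips whose tags contain every word of the query, exactly."""
    words = set(query.lower().split())
    return [cid for cid, clip in CLIPS.items() if words <= clip["tags"]]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_vector, top_k=2):
    """Rank clips by how close their meaning vector is to the query's."""
    ranked = sorted(
        CLIPS,
        key=lambda cid: cosine(query_vector, CLIPS[cid]["vector"]),
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    # "soccer celebration" matches no tag, so keyword search finds nothing.
    print(keyword_search("soccer celebration"))  # → []
    # Assumed embedding of "soccer celebration" in the same toy space.
    print(semantic_search([0.85, 0.85, 0.0]))  # → ['clip_001', 'clip_003']
```

Neither football clip is tagged "soccer" or "celebration", yet the vector comparison surfaces both and leaves the storm footage out.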

But semantic video search only reaches its full potential when it is not tied to one fixed Media Asset Management setup.

Why Semantic Video Search Should Be MAM-Agnostic:

Picture this – most organizations aren’t using one single, clean MAM environment. Over time, they accumulate:

- Multiple archives.

- Different storage systems.

- Legacy and modern MAMs.

- Cloud and on-premise setups.

Starting from scratch isn’t practical when all they want is better search quality.

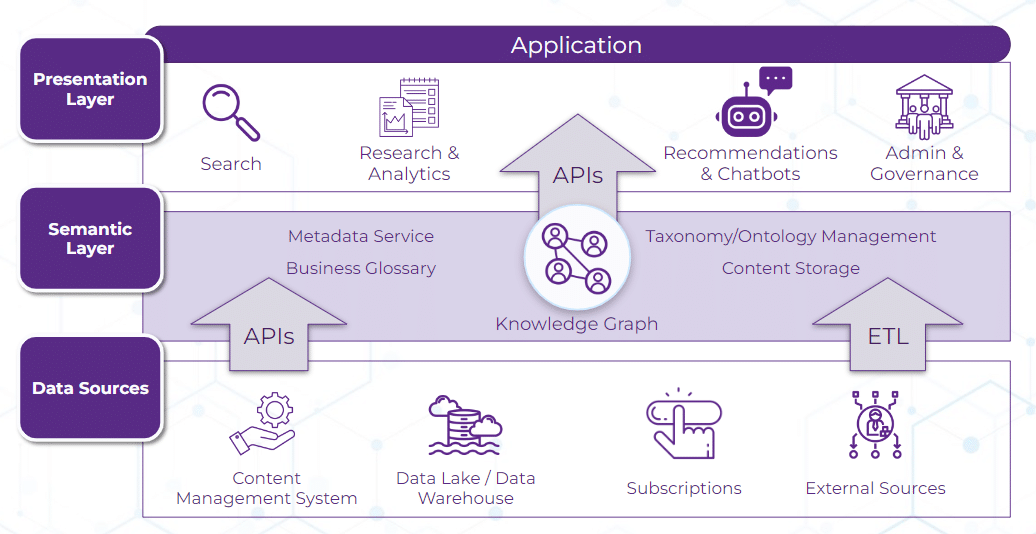

A MAM-agnostic Semantic Video Search API works across this complexity. It requires neither a new Media Asset Management system nor a complete migration. By connecting to the tools already in place, it layers smarter search over existing frameworks instead of replacing them.
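One common way to build such a layer is the adapter pattern: each existing MAM is wrapped in a small read-only connector that translates its native records into one shared shape. The Python sketch below assumes that design. `MamConnector`, both connector classes, and their field names are hypothetical, and real connectors would call each vendor’s own API instead of reading in-memory lists.

```python
from dataclasses import dataclass
from typing import Iterable, List, Protocol


@dataclass(frozen=True)
class Asset:
    """Common record the search layer uses, whatever MAM the file lives in."""
    source: str    # which MAM holds the file
    asset_id: str  # the ID inside that MAM
    title: str
    uri: str       # where the media can be read from


class MamConnector(Protocol):
    """Hypothetical read-only interface each existing MAM is wrapped in."""
    name: str

    def list_assets(self) -> Iterable[Asset]: ...


class LegacyMamConnector:
    """Hypothetical on-premise MAM that exposes (id, title, path) rows."""
    name = "legacy"

    def __init__(self, rows):
        self._rows = rows

    def list_assets(self):
        for asset_id, title, path in self._rows:
            yield Asset(self.name, asset_id, title, f"file://{path}")


class CloudMamConnector:
    """Hypothetical cloud MAM that exposes dicts with key/name/bucket fields."""
    name = "cloud"

    def __init__(self, items):
        self._items = items

    def list_assets(self):
        for item in self._items:
            uri = f"s3://{item['bucket']}/{item['key']}"
            yield Asset(self.name, item["key"], item["name"], uri)


def collect_assets(connectors: Iterable[MamConnector]) -> List[Asset]:
    """Read assets from every connected MAM; nothing is moved or migrated."""
    return [asset for connector in connectors for asset in connector.list_assets()]
```

Adding another archive means writing one more connector; the search layer and the existing MAMs stay unchanged.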

This is where getting systems to work together really matters.

Prioritizing Interoperability Over Replacement:

What matters now isn’t swapping out tools; it’s getting them to work together smoothly.

By prioritizing open standards and robust APIs, semantic video search can integrate cleanly with any Media Asset Management setup. The result is:

- Less friction between tools.

- Faster adoption across teams.

- Freedom to evolve, including switching providers whenever needed, with no lock-in.

AI Media Discovery then runs in the background, improving existing routines without disrupting them.

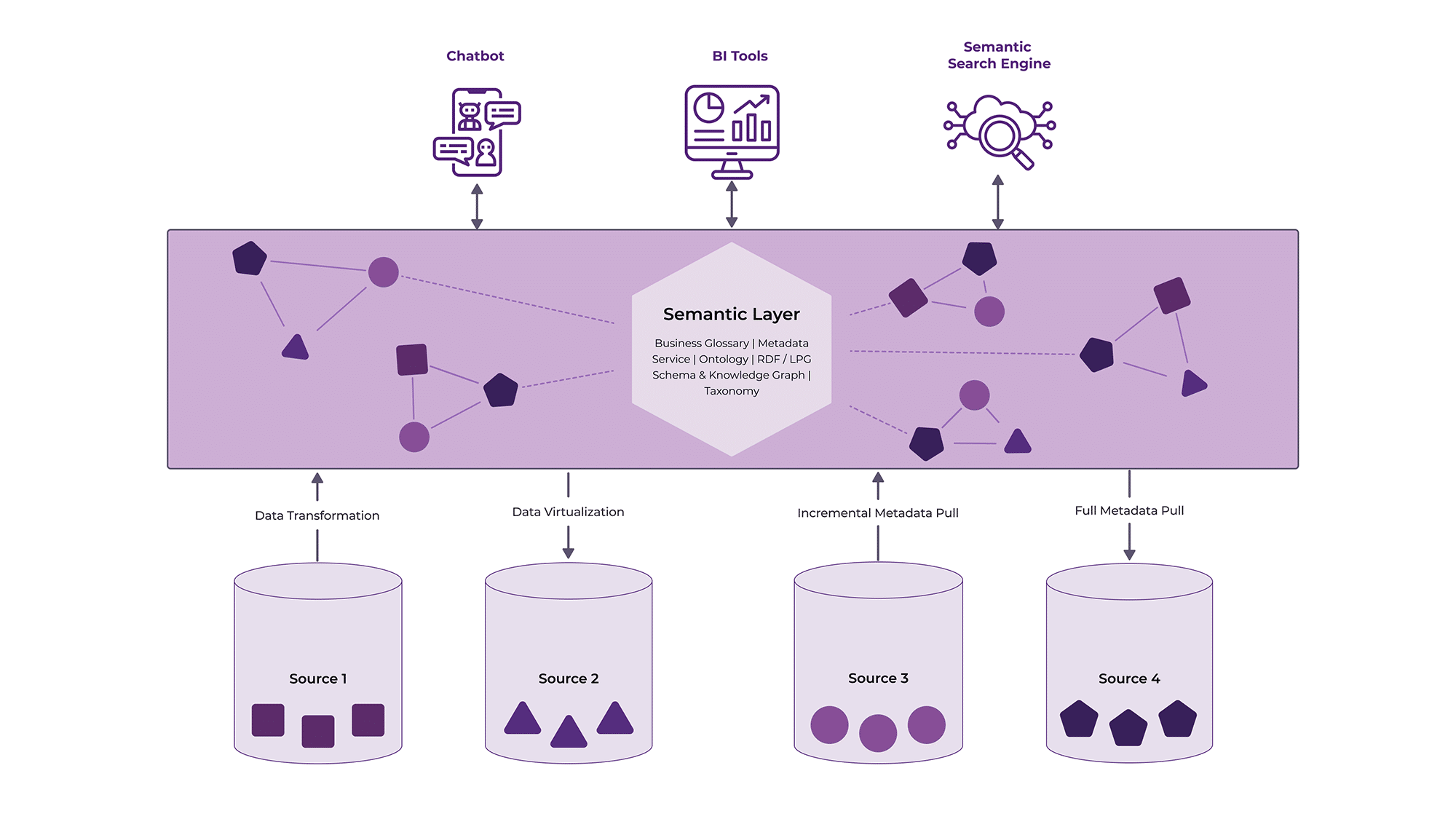

One Semantic Layer Across Multiple Media Archives:

Imagine a tool that understands meaning no matter where files are stored. It works the same whether your videos sit in one place or are spread across ten systems. Think of it as a translator for search: wherever the data lives, the way you look for it stays familiar.

Here is a detail most people overlook: with a shared semantic layer, search no longer depends on:

- Where the file is stored.

- Who manages the system it lives in.

- Which labels were attached when it was ingested.

It depends only on what people actually want to find.

This matters most in large organizations, where editors, reporters, promotion teams, and analysts all approach the same shared material differently.
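Behind a single search box, one way to achieve this is a federated query: the same request goes to each archive’s index and the hits are merged into one ranked list. The sketch below assumes every archive was indexed with the same model, so scores are comparable across archives. `federated_search` and the stub archives in the usage example are illustrative only.

```python
import heapq
from typing import Callable, Dict, List, Tuple

# Each archive exposes a search function returning (asset_id, score) pairs.
SearchFn = Callable[[str], List[Tuple[str, float]]]


def federated_search(query: str, archives: Dict[str, SearchFn], top_k: int = 3):
    """Send one query to every archive and merge the hits into a single ranking.

    ASSUMPTION: every archive was indexed with the same embedding model, so
    scores from different archives are directly comparable.
    """
    hits = []
    for archive_name, search in archives.items():
        for asset_id, score in search(query):
            hits.append((score, archive_name, asset_id))
    # Highest scores win, regardless of which archive a hit came from.
    best = heapq.nlargest(top_k, hits)
    return [(archive, asset_id, score) for score, archive, asset_id in best]
```

For example, with stub archives where `news` returns `[("n-12", 0.91), ("n-40", 0.55)]`, `sports` returns `[("s-7", 0.87)]`, and `promo` returns `[("p-3", 0.62)]`, the merged top three interleave all three archives by score, and the person searching never needs to know which system held each clip.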

From Keywords to Video Search with Meaning (Contextual Video Search):

Keywords are fragile. Context is durable. Contextual Video Search understands:

- What appears in the video

- Who is speaking, and what is being said

- What is happening in that specific moment

Instead of hunting for exact terms, you search by ideas, moments, or intent, and the system returns the most relevant scene rather than just the file that contains it. This becomes critical in large video archives where manual tags are incomplete, inconsistent, or missing altogether.
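Scene-level retrieval can be sketched by indexing each scene separately, with one vector for what appears on screen and one for what is said, then combining the two similarities. The weighted-sum fusion, the field names, and the 0.6 visual weight below are assumptions chosen for illustration; producing the vectors (vision and speech models) is out of scope here.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Scene:
    video_id: str
    start_s: float                 # scene start, in seconds
    end_s: float                   # scene end, in seconds
    visual_vec: List[float]        # embedding of what appears on screen
    speech_vec: List[float]        # embedding of what is said (e.g. transcript)
    speaker: Optional[str] = None  # who is speaking, if known


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_scene(query_vec, scenes, visual_weight=0.6, speaker=None):
    """Return the single best-matching scene (not a whole file), or None.

    Each scene is scored as a weighted sum of visual and speech similarity;
    the default 0.6/0.4 split is an arbitrary illustrative choice.
    """
    candidates = [s for s in scenes if speaker is None or s.speaker == speaker]
    if not candidates:
        return None

    def score(scene):
        return (visual_weight * cosine(query_vec, scene.visual_vec)
                + (1 - visual_weight) * cosine(query_vec, scene.speech_vec))

    return max(candidates, key=score)
```

Because each result carries start and end times, a player can jump straight to the moment instead of the top of the file, and the optional `speaker` filter shows how "who is speaking" can narrow the results.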

The real strength of Semantic Video Search lies in moving beyond keywords to scene-level understanding.

That’s exactly why it works best as a layer on top of Media Asset Management, rather than being buried inside it.

Why Video Content Indexing Should Be Independent:

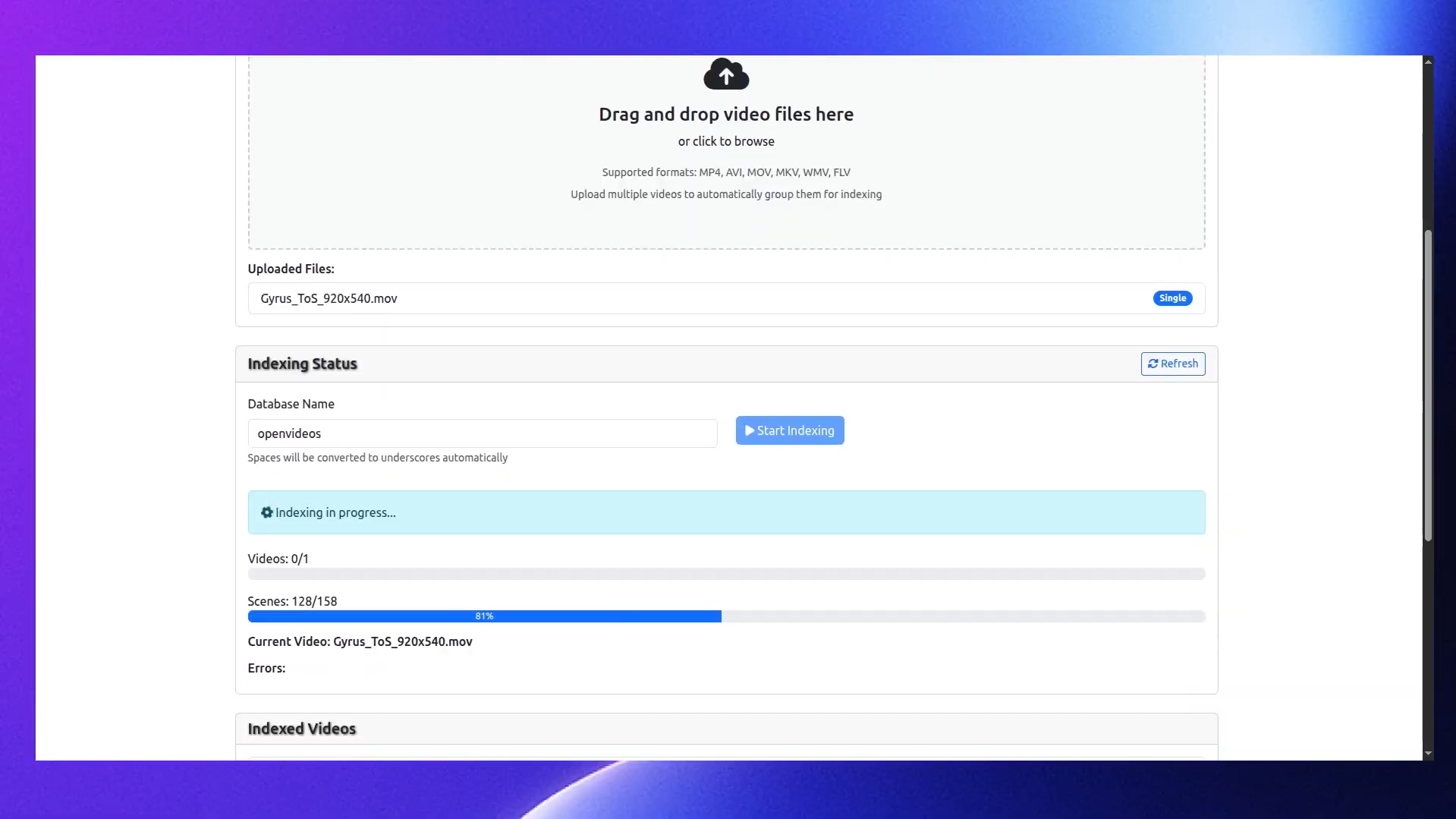

Video indexing helps systems understand what’s inside a video (visuals, audio, and speech), so content can be found by meaning, not just keywords.

When indexing is kept separate, videos can be indexed once and used across any MAM or media platform. The indexed data works independently, no matter where the video is stored or accessed.

Operations run faster because the media library becomes simpler and more cost-effective: one index feeds many tasks. Savings add up when files are reused instead of re-created each time. Workflows feel smoother because assets are quicker to locate across storage systems, and the whole setup adapts easily as needs shift.

This makes video search flexible, easy to integrate, and free from platform dependency.
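A minimal sketch of "index once, use anywhere", assuming index entries are keyed by a hash of the media bytes rather than by any one MAM’s asset ID. `IndependentIndex` and the `analyze` callback are hypothetical placeholders for a real analysis pipeline.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Identify media by its bytes, not by where or in which MAM it is stored."""
    return hashlib.sha256(data).hexdigest()


class IndependentIndex:
    """Hypothetical index kept outside any MAM and shared by all of them."""

    def __init__(self, analyze):
        self._analyze = analyze  # expensive step: visual/audio/speech analysis
        self.entries = {}        # content_id -> analysis result
        self.locations = {}      # content_id -> {(mam, asset_id), ...}
        self.analysis_runs = 0   # how often the expensive step actually ran

    def register(self, mam: str, asset_id: str, data: bytes) -> str:
        cid = content_id(data)
        if cid not in self.entries:
            # First time these exact bytes are seen: analyze once.
            self.entries[cid] = self._analyze(data)
            self.analysis_runs += 1
        # Every copy, in any MAM, points to the same index entry.
        self.locations.setdefault(cid, set()).add((mam, asset_id))
        return cid
```

Two simplifications to keep in mind: large media would be hashed in chunks rather than held in memory, and only byte-identical copies share an entry, so a transcoded proxy would hash differently.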

Where Gyrus Semantic Video Search Fits In:

Gyrus Semantic Video Search is built as an independent semantic layer that works alongside existing Media Asset Management systems.

The Gyrus system stays flexible. It connects through APIs, understands what content means, and then delivers useful answers. Existing setups keep running as they are, untouched.

How storage works is not its concern. Because it runs alongside existing systems, companies can upgrade search capabilities without a full overhaul.

Why This Affects Teams Beyond Technology:

Finding things faster doesn’t just upgrade tools; it changes how people work.

When semantic search works regardless of MAM:

- Editors spend less time finding content.

- Reporters can resurface old stories and make better use of archived material.

- Content teams avoid duplicate work.

- Decision-makers gain visibility into hidden assets.

A Modern MAM Is an Orchestrator, Not a Monolith:

The outdated view holds that a single tool should handle every task. Today’s approach is modular: separate pieces fit together like puzzle parts, each does its job well, and they connect through APIs. There is no need for one giant solution.

Semantic search fits naturally into this model. It does not replace MAMs; it enhances them.
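In an orchestrated setup, the MAM keeps owning ingest and simply announces new assets; the search layer subscribes and does its own work. The sketch below shows that loose coupling with a tiny in-process event bus. The `asset.ingested` topic name, the event fields, and both classes are hypothetical; a real deployment might use webhooks, a message queue, or polling instead.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish/subscribe hub.

    Stands in for whatever integration mechanism a real deployment uses
    (webhooks, a message queue, or polling a MAM's API).
    """

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, payload):
        # Topics with no subscribers are silently ignored.
        for handler in self._subscribers[topic]:
            handler(payload)


class SemanticSearchService:
    """Hypothetical search layer that reacts to ingest events it does not own."""

    def __init__(self):
        self.queued_for_indexing = []

    def on_asset_ingested(self, event):
        # A real service would start analysis here; this sketch only records it.
        self.queued_for_indexing.append((event["mam"], event["asset_id"]))
```

Neither side calls the other directly, so either one can be replaced without touching the other: the orchestrator model in miniature.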

A Truly Modern MAM Ecosystem:

- Orchestrates existing tools.

- Adapts to new technologies.

- Evolves without disruption.

Semantic Media Search becomes the connective tissue that brings meaning across the entire media landscape.

Final Thought:

Semantic video search performs at its peak when its boundaries stay loose. Without being tied to a single Media Asset Management system, it gains flexibility, along with room to expand, adapt, and stay relevant.

Hidden meaning in old files becomes findable when one Semantic Media Search API taps into every storage location. Because semantic search is API-driven, it can plug into any MAM platform without changing existing ingest, storage, or workflows. Even in organizations running multiple MAM systems, the same search and indexing layer works seamlessly across all of them.